NeRF - Ongoing

NeRF (Neural Radiance Fields) makes it possible to reconstruct a 3D scene from a sparse set of 2D multi-view images. NeRF trains a fully-connected neural network on a 5D input (spatial location and viewing direction) to produce a 4D output (color and volume density), and uses volume rendering to render novel views.

Under Dr. Vijeta Khare's supervision, I implemented the NeRF model, following the ECCV 2020 paper by Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, et al.

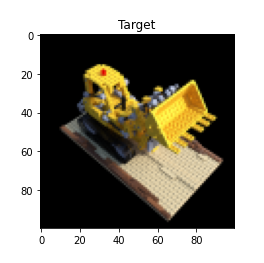

The 360-degree multi-view images of an object are processed with COLMAP and packed into a .npz file containing the images, camera poses, and focal lengths.
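As a rough illustration of that data layout, the sketch below reads such an archive with NumPy. The key names `images`, `poses`, and `focal`, the array shapes, and the single shared focal length are assumptions for the example, not necessarily the exact layout used in this project. A tiny synthetic archive is built in memory so the snippet runs without real data.

```python
import io
import numpy as np

def load_scene(npz_file):
    """Load images, camera poses, and focal length from an .npz archive.

    Assumed (hypothetical) keys: "images" (N, H, W, 3), "poses" (N, 4, 4),
    and "focal" (a single scalar shared by all views).
    """
    with np.load(npz_file) as data:
        images = data["images"].astype(np.float32)
        poses = data["poses"].astype(np.float32)
        focal = float(data["focal"])
    return images, poses, focal

# Build a tiny synthetic archive in memory (stand-in for the real file).
buffer = io.BytesIO()
np.savez(
    buffer,
    images=np.zeros((2, 4, 4, 3)),
    poses=np.tile(np.eye(4), (2, 1, 1)),
    focal=np.array(5.0),
)
buffer.seek(0)

images, poses, focal = load_scene(buffer)
```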

From this data, I computed the origin and direction of each ray, then sampled query points (x, y, z) along the rays together with their depth values (theta, delta).
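The ray computation can be sketched as follows, assuming a pinhole camera that looks down the negative z-axis and a 4x4 camera-to-world pose matrix. The function names `get_rays` and `sample_points`, the evenly spaced sampling, and the near/far bounds are illustrative choices, not necessarily what this project uses.

```python
import numpy as np

def get_rays(height, width, focal, c2w):
    """Return per-pixel ray origins and directions, each of shape (H, W, 3)."""
    i, j = np.meshgrid(
        np.arange(width, dtype=np.float32),
        np.arange(height, dtype=np.float32),
        indexing="xy",
    )
    # Pixel directions in camera space (camera looks along -z).
    dirs = np.stack(
        [(i - width * 0.5) / focal, -(j - height * 0.5) / focal, -np.ones_like(i)],
        axis=-1,
    )
    # Rotate directions into world space; the origin is the camera position.
    rays_d = dirs @ c2w[:3, :3].T
    rays_o = np.broadcast_to(c2w[:3, 3], rays_d.shape)
    return rays_o, rays_d

def sample_points(rays_o, rays_d, near, far, n_samples):
    """Sample evenly spaced query points along each ray: (H, W, n_samples, 3)."""
    depths = np.linspace(near, far, n_samples, dtype=np.float32)
    points = rays_o[..., None, :] + rays_d[..., None, :] * depths[:, None]
    return points, depths
```

The original paper additionally jitters the sample depths within each interval (stratified sampling); the even spacing here only keeps the sketch short.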

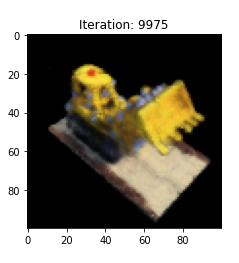

I used positional encoding to map the query points and depth values into a higher-dimensional space. An MLP (multilayer perceptron) then takes the encoded inputs and outputs a radiance field in 3D space (rgba).
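A minimal sketch of the encoding step is shown below. It keeps the raw coordinates and appends sine and cosine features at doubling frequencies. The function name and the default of 6 frequency bands are assumptions for illustration; the paper's formulation also scales the input by π and uses different band counts for position and direction.

```python
import numpy as np

def positional_encoding(x, num_freqs=6):
    """Map (..., D) inputs to (..., D + 2 * D * num_freqs) features."""
    features = [x]
    for k in range(num_freqs):
        for fn in (np.sin, np.cos):
            # Frequency doubles with every band: 1, 2, 4, ...
            features.append(fn((2.0 ** k) * x))
    return np.concatenate(features, axis=-1)
```

With 3D points and 6 bands, each point becomes a 39-dimensional vector (3 raw values plus 36 sinusoidal features), which is what the MLP would receive as input.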

To compute the loss, the MLP output is converted into an image (rgb) with volume rendering and compared against the ground-truth image.
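The rendering and loss step can be sketched with the standard discrete volume rendering quadrature: each sample's opacity is `1 - exp(-sigma * delta)`, weighted by the transmittance accumulated in front of it. The function names and the mean squared error loss are assumptions for illustration, and the inputs are assumed to be already activated (colors in [0, 1], non-negative densities).

```python
import numpy as np

def volume_render(rgb, sigma, depths):
    """Composite per-sample colors along each ray.

    rgb: (..., N, 3), sigma: (..., N), depths: (N,) sorted near to far.
    """
    # Distance between adjacent samples; the last interval is treated as infinite.
    deltas = np.concatenate([depths[1:] - depths[:-1], [1e10]])
    alpha = 1.0 - np.exp(-sigma * deltas)
    # Transmittance: probability the ray reaches each sample unoccluded.
    trans = np.cumprod(1.0 - alpha + 1e-10, axis=-1)
    trans = np.concatenate([np.ones_like(trans[..., :1]), trans[..., :-1]], axis=-1)
    weights = alpha * trans
    color = np.sum(weights[..., None] * rgb, axis=-2)
    depth = np.sum(weights * depths, axis=-1)
    return color, depth, weights

def mse_loss(pred, target):
    """Mean squared error between rendered and ground-truth pixels."""
    return float(np.mean((pred - target) ** 2))
```

Because every operation is differentiable, the same computation written in an autodiff framework lets the image loss propagate back to the MLP weights.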

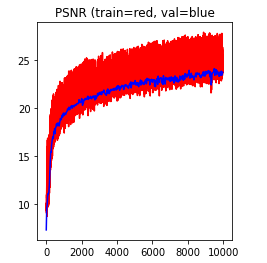

Because compute resources for training are limited, I am currently working to cut the training time and exploring alternatives such as Mip-NeRF and KiloNeRF, to be trained on custom data built with COLMAP and LLFF.
